Current projects

AutoAssist – Artificial Intelligence for Assist-as-needed Rehabilitation Therapies

(BMBF)

AutoAssist is a cooperation between us, the IBMT Fraunhofer, Ottobock, NEEEU! Spaces, Deutsche Telekom MMS and Teigler GmbH.

AutoAssist is a cooperation between us, the IBMT Fraunhofer, Ottobock, NEEEU! Spaces, Deutsche Telekom MMS and Teigler GmbH.

The Stroke Research Unit of the Universitätsklinikum Erlangen (University Hospital Erlangen) is our clinical partner.

We will use ultrasound scanning, advanced intent detection and virtual reality to let stroke patients design their own therapy tasks, and to tune the therapy automatically according to each patient's progress.

HIT-Reha – Human Impedance Control for Tailored Rehabilitation

(DFG Sachbeihilfe CA1389/3‐1, project #505327336)

Functional Electrical Stimulation (FES) has long been recognized as a tool for the rehabilitation of neurological patients, but its use in task-level applications is limited by the precision, bandwidth and force output it can achieve. Integrating FES with wearable robotics could overcome these limitations.

In July 2023, the AIROB lab and its partners at the ZITI lab and the Spinal Center of the University of Heidelberg will start work on the DFG project Human Impedance Control for Tailored Rehabilitation (HIT-Reha).

The project's main focus will be to develop cutting-edge, lightweight wearable devices that assist muscle action by applying external forces and amplify the signals of efferent nerves by injecting stimulation currents as needed. This could help patients with various forms of paralysis regain lost function.

IntelliMan – AI-Powered Manipulation System for Advanced Robotic Service, Manufacturing and Prosthetics

(EU Horizon: 101070136)

A key challenge in intelligent robotics is building robots that can interact directly with the world around them to achieve their goals. The main objective of the IntelliMan project is to have a robot learn efficiently to manipulate objects purposefully and with high performance.

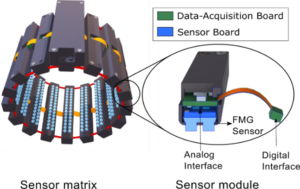

In this project, the AIROB Lab focuses on intent detection in human-machine interaction, through research on sensor modalities, biosignal analysis and machine learning. We apply this research in particular to the control of virtual and real upper-limb prostheses. This involves both developing new algorithms and methods and validating them with participants (with and without limb differences) in user studies.

We also lead the work package "Shared Autonomy between Humans and Robots", in which we apply findings from our research to other use cases with the aim of increasing safety in human-robot collaboration.

Funded by the European Union. Views and opinions expressed are however those of the author(s) only and do not necessarily reflect those of the European Union or CNECT. Neither the European Union nor the granting authority can be held responsible for them.

Past projects

VVITA – Validation of the Virtual Therapy Arm

A three-year clinical and commercial validation of virtual-reality therapy for phantom limb pain and stroke rehabilitation.

A 3yrs clinical and commercial validation of phantom pain and stroke rehab therapy in virtual reality.

Check out this webpage, this video and this video!

Project performed at the Institute of Robotics and Mechatronics of the DLR – German Aerospace Center, Oberpfaffenhofen, Germany.

Deep-Hand – Deep sensing and deep learning for myocontrol of the upper limb

A two-year basic research project on detecting and interpreting deep muscle activity for prosthetic myocontrol.

2yrs basic research project about the detection and interpretation of deep muscular activity for prosthetic myocontrol.

Project performed at the Institute of Robotics and Mechatronics of the DLR – German Aerospace Center, Oberpfaffenhofen, Germany.